The Salesforce Spring ’23 release is around the corner, and it looks packed with good news. A few features that were scheduled for a later release seem to have been brought forward, and the beta version shows other impressive additions. Overall, the bag of goodies is loaded and anticipation is high!

By tradition, Salesforce ships major releases three times a year. These releases are eagerly awaited because they bring new features and technology updates that admins can leverage. I feel that the Spring ’23 release will help drive productivity and boost security. User experience (UX) has clearly been factored in, and I see many opportunities to build fantastic apps for both internal and external stakeholders.

This article covers what I consider the 10 most important features of the Salesforce Spring ’23 release for admins.

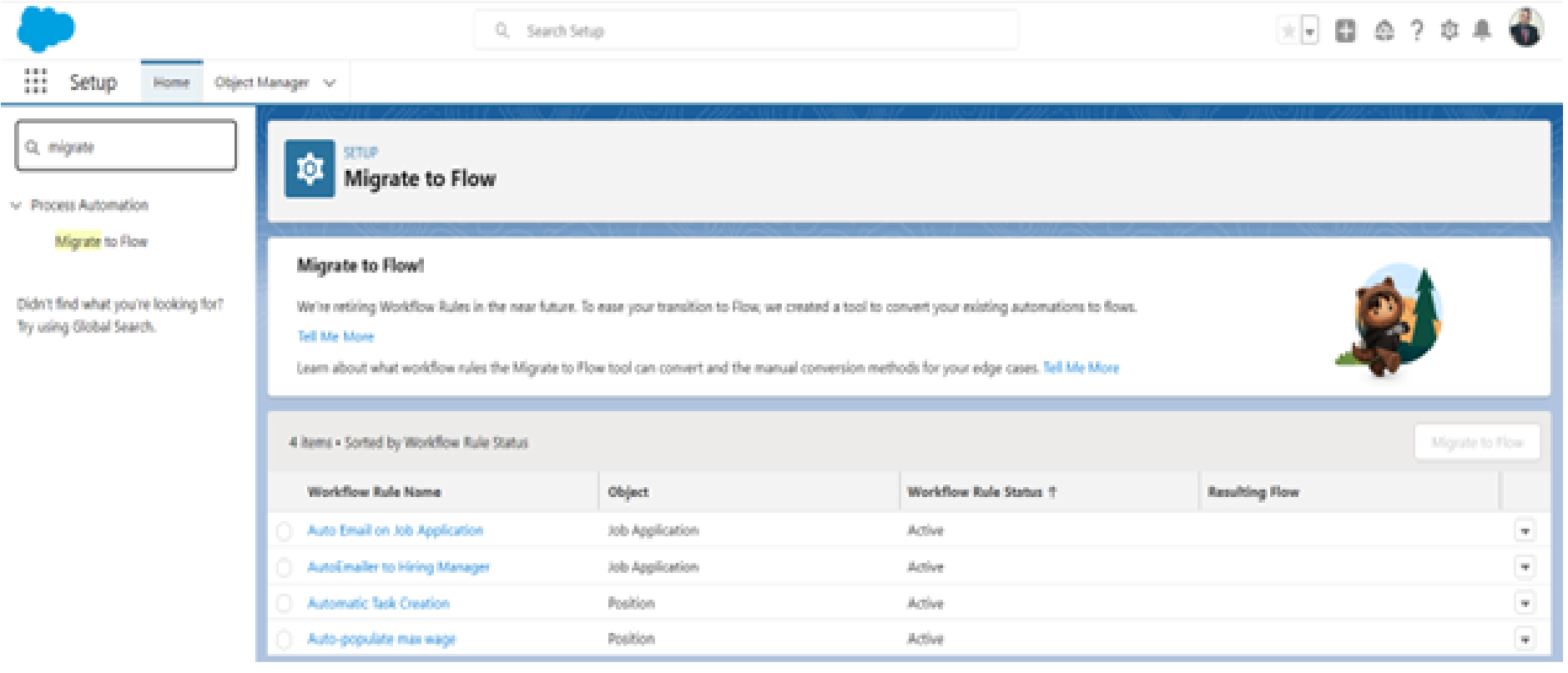

1. Migrate Process Builder to Flow

At Dreamforce ’21, Salesforce announced the retirement of Workflow Rules and Process Builder and planned the release of tools for migrating to Flow. Until now, only workflow rules could be migrated.

With Spring ’23, Salesforce is releasing an updated Migrate to Flow tool that eases the transition to Flow Builder. In addition to workflow rules, the tool can now convert Process Builder processes into flows. Flows can do everything that processes can do and more.

From Setup, enter Migrate to Flow in the Quick Find box, and then select Migrate to Flow. On the Migrate to Flow page, select the process that you want to convert into a flow, and then click Migrate to Flow. Next, select the criteria that you want to migrate to the flow. After the process is migrated, test the new flow in Flow Builder. If everything works as expected, activate the flow and deactivate the process you converted.

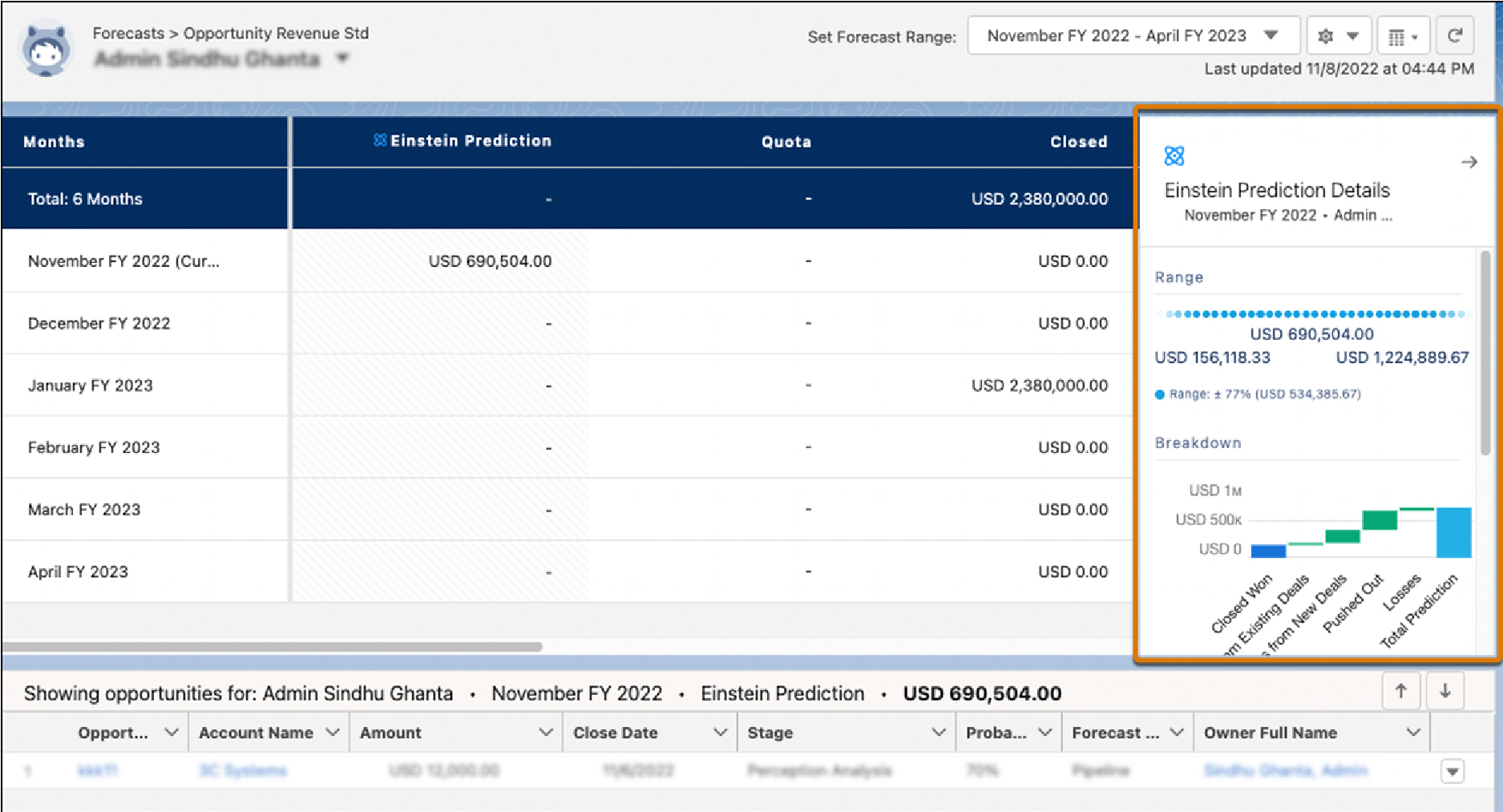

2. Build Custom Forecast Pages with the Lightning App Builder

Forecasting in Sales Cloud has seen a good number of updates over the last few releases. In Spring ’23, you can design and build custom forecast pages using the Lightning App Builder.

Because the Lightning App Builder makes flexipages easy to build, you can assemble pages from standard and custom components. Your page designs can evolve as fast as your sales processes, and you can create and assign different layouts for different users.

3. Collaborate on Complex Deals with Opportunity Product Splits

In complex business transactions or negotiations, closing a deal is rarely the work of a single person; it usually involves an entire team. Splitting the opportunity lets you track credit across multiple team members. Previously, splits were possible only at the opportunity level. With the Spring ’23 release, splits are also available at the product level.

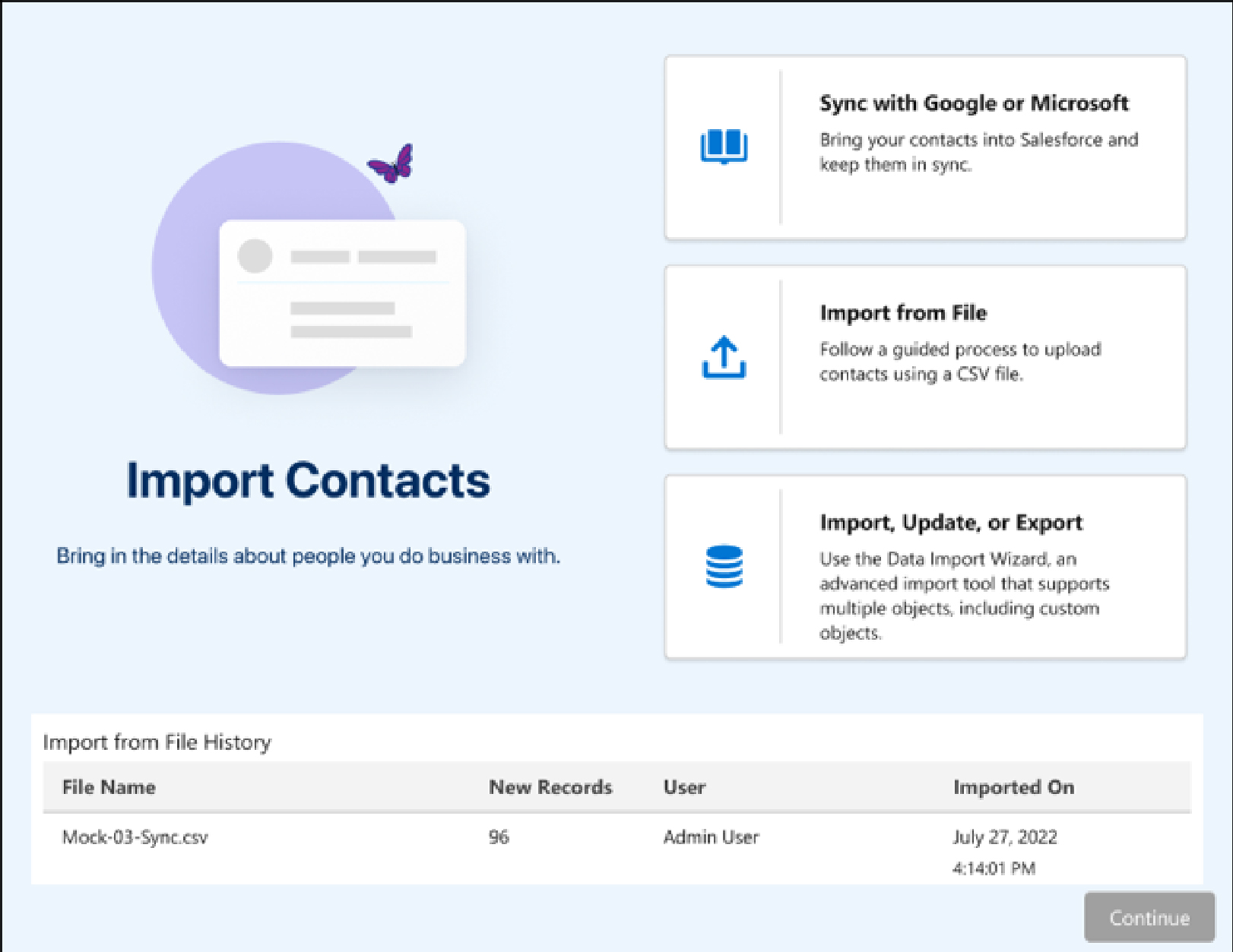
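To make the idea of a product-level split concrete, here is a minimal arithmetic sketch in Python. It does not call any Salesforce API. The function name, the input shape, and the rule that percentages must total exactly 100 are assumptions made for illustration only.

```python
from decimal import Decimal


def split_product_credit(amount, shares):
    """Return each team member's credit for one opportunity product line.

    amount: total for the product line.
    shares: mapping of team member name -> split percentage
            (hypothetical input shape, for illustration only).
    Assumes the percentages must total exactly 100.
    """
    total_pct = sum(Decimal(str(pct)) for pct in shares.values())
    if total_pct != Decimal("100"):
        raise ValueError(f"split percentages total {total_pct}, expected 100")
    line_amount = Decimal(str(amount))
    # Each member's credit is their percentage of the product line amount.
    return {
        member: line_amount * Decimal(str(pct)) / Decimal("100")
        for member, pct in shares.items()
    }


# Hypothetical example: two team members share credit for one product line.
credits = split_product_credit(50000, {"Asha": 60, "Ben": 40})
```

Running the same calculation per product line, rather than once per opportunity, is what lets credit differ from one product to another within the same deal.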

4. Importing Contacts and Leads with a Guided Experience

With the new guided experience, users who choose to import contacts or leads are presented with multiple options for importing data, depending on their assigned permissions.

The new wizard provides a simple interface that walks users through the steps to import a CSV file.

5. Dynamic Forms for Leads and Cases

Salesforce has released this Dynamic Forms enhancement early. With Dynamic Forms, case and lead record pages can now be configured to make them more robust. Previously, this capability was available only for account, contact, and opportunity record pages.

6. View All for Dynamic Related Lists

With the Spring ’23 release, Dynamic Related Lists include a “View All” link. This link lets users see the complete list of related records.

7. Dynamic Actions for Standard Objects

Dynamic Actions are now available for all standard objects. Previously, they were available only for Account, Case, Contact, Lead, and Opportunity.

Dynamic Actions let you create intuitive, responsive, and uncluttered pages that display only the actions your users need to see, based on the criteria you specify.

Instead of scanning an endless list of actions, users will be presented with a simple choice, relevant to their roles and profiles, or when a record meets some criteria.

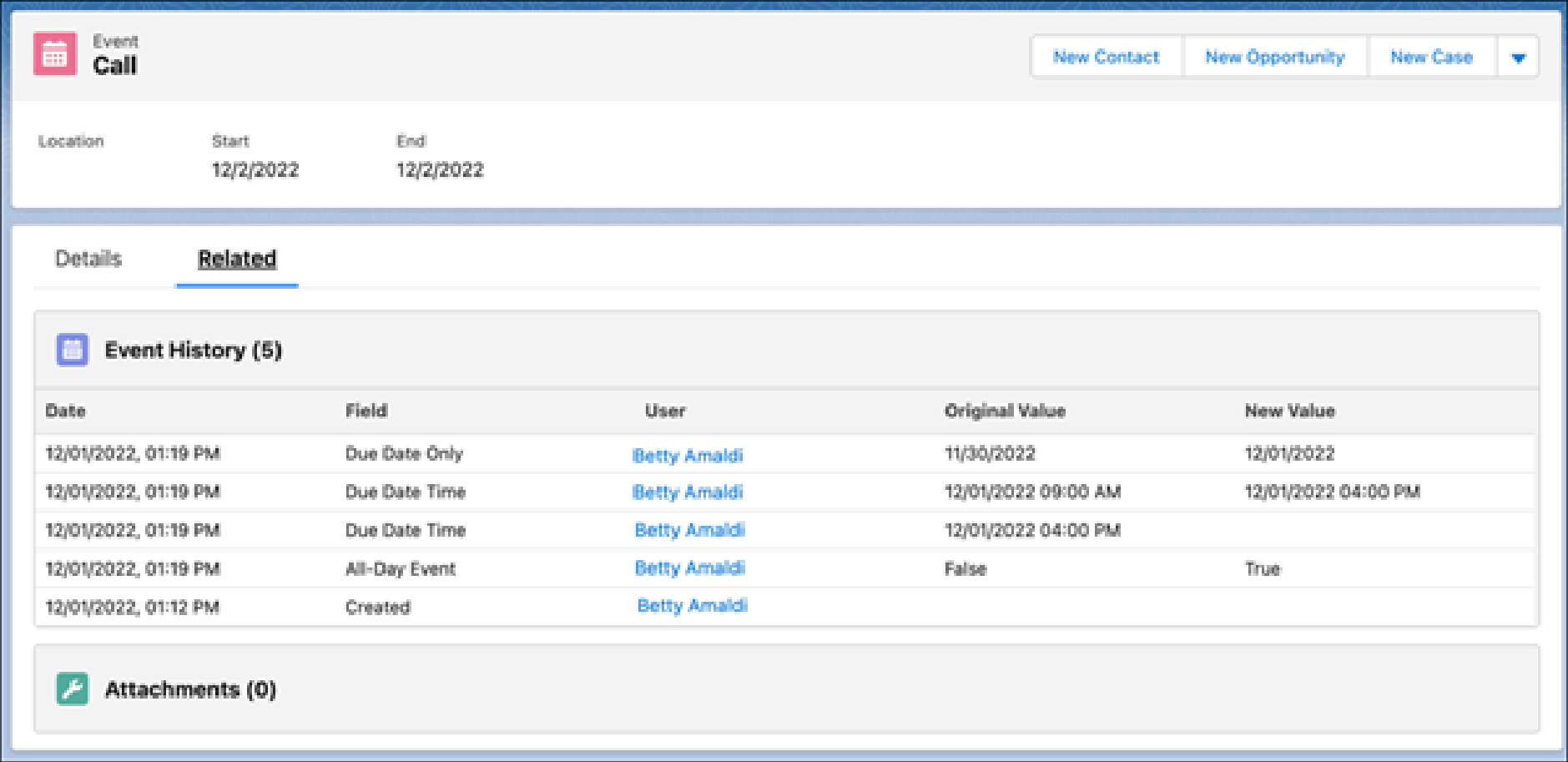
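The filtering idea behind Dynamic Actions can be pictured with a small conceptual sketch in Python. This is not how Salesforce implements the feature, and nothing here is a Salesforce API. The action names, the `visible_when` key, and the record and user fields are all hypothetical.

```python
def visible_actions(actions, record, user):
    """Return the names of actions to show for this record and user.

    An action with no visibility rule is always shown; otherwise its
    rule (a hypothetical callable) decides based on record and user.
    """
    return [
        action["name"]
        for action in actions
        if action.get("visible_when") is None
        or action["visible_when"](record, user)
    ]


# Hypothetical page actions with optional visibility criteria.
actions = [
    {"name": "Edit"},
    {"name": "Close Case",
     "visible_when": lambda rec, usr: rec["Status"] != "Closed"},
    {"name": "Escalate",
     "visible_when": lambda rec, usr: usr["profile"] == "Support Manager"},
]

# A support agent viewing an already closed case sees only "Edit".
shown = visible_actions(
    actions, {"Status": "Closed"}, {"profile": "Support Agent"}
)
```

In the product itself, these criteria are configured declaratively in the Lightning App Builder rather than written as code.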

8. Track Field History for Activities

When field history tracking for activities is turned on, you can now track up to six fields for Task and Event.

9. Picklist Updates

Picklist fields gain several new features, including:

- Clean Up Inactive Picklist Values

- Bulk Manage Picklist Values

- Limit the Number of Inactive Picklist Values (Release Update)

- Capture Inclusive Data with Gender Identity and Pronouns Fields. These two new standard picklist fields are available as optional fields on Leads, Contacts, and Person Accounts.

10. Reports and Dashboards

Reports and Dashboards have received many exciting updates.

- Creating Personalized Report Filters

You can now create a dynamic report filter based on the user’s profile so that users view the records specific to them.

- Subscribe to More Reports and Dashboards

In Unlimited Edition orgs, users can now subscribe to up to 15 reports and 15 dashboards. Previously, the limit was 7.

- Stay Informed on Dashboard and Report Subscriptions

You can now create a custom report type to see which reports, dashboards, or other analytic assets users have subscribed to.

- Stay Organized by Adding Reports and Dashboards to Collections

You can now use collections to organize reports and dashboards even if they exist in multiple folders. You can also pin important collections to your home page, hide irrelevant collections, and share collections with others.

- Focus Your View with More Dashboard Filters

You can refine and target dashboard data with additional filters on Lightning dashboards. You no longer need to maintain separate versions of the same dashboard for different business units and regions because of the three-filter limit. This feature is in beta only.

Conclusion

I feel the Salesforce Spring ’23 release will not disappoint administrators, as many of the most awaited features seem to have made it in. A few features seen in the beta came as a pleasant surprise. I encourage you to read the release notes so that you can identify the features that matter most to you.

At InfoVision, we have a dedicated Salesforce Center of Excellence that focuses on innovation, through which we develop new Salesforce competencies. We leverage a range of tools, processes, and accelerators to build industry-specific use cases that conform to global standards. We therefore follow every Salesforce release with great interest and curiosity. The releases create opportunities for us to innovate and find differentiating ways to address the unmet needs of our customers.

I am happy to have more in-depth discussions on any aspect of Salesforce with those of you who are interested.