The role of the physical store has changed. With e-commerce delivering unmatched convenience, retailers can no longer compete on speed or price alone. Brick-and-mortar retailers are now focusing on creating engaging, differentiated experiences that can’t be replicated online. This shift is at the core of experiential retail, a growing strategy designed to attract, retain, and meaningfully engage customers.

In this post, we look at the drivers behind experiential retail, examine how global and regional brands are implementing immersive strategies, break down the key elements of successful experiences, and explore the measurable business impact.

What is Experiential Retail?

In 2025, immersive in-store experiences are no longer “add-ons” for premium brands. They’re becoming table stakes for retailers who want to drive loyalty, foot traffic, and brand differentiation. The store is evolving from a point of sale into a destination where consumers can explore, connect, and interact with both products and the brand. These experiences rely on technology, personalization, community engagement, and purposeful design to foster long-term relationships with shoppers.

Why Experiential Retail Matters

- Consumer preferences are changing: Recent research shows that Gen Z and millennial shoppers increasingly prioritize interactive, educational in-store experiences over conventional retail environments.

- Digital and physical are blending: Consumers now move seamlessly between physical and digital touchpoints, often combining online research with in-store visits to complete their shopping journey.

- In-store differentiation is critical: Immersive formats such as events, try-ons, and personalized services help physical stores stay competitive against e-commerce alternatives.

What’s Driving the Shift?

Changing consumer expectations

Today’s shoppers are more informed and selective than ever. They routinely rely on online research, peer reviews, and price comparisons before making in-store purchases. But beyond convenience and product variety, what they truly seek are meaningful, personalized experiences. For younger generations in particular, the shopping journey is not just about buying; it’s about enjoying a seamless, engaging, and memorable interaction with the brand.

Omnichannel integration

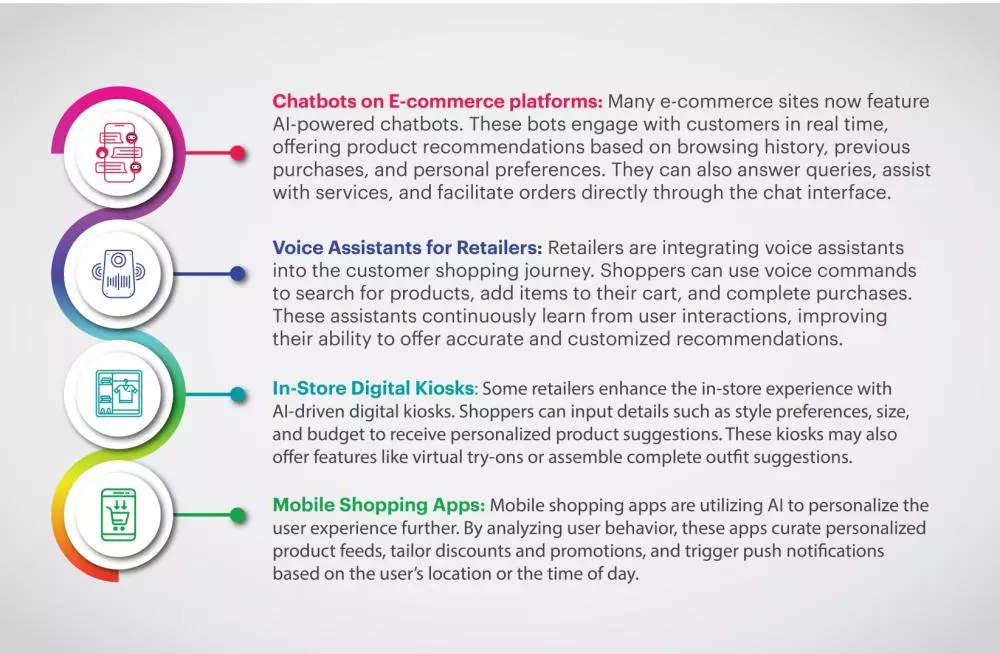

The lines between digital and physical retail continue to blur. Shoppers now expect a consistent and connected experience, whether they begin their journey online or in-store. To meet these expectations, retailers are using technology like mobile apps, virtual product previews, and intelligent support systems to create a seamless flow across all touchpoints.

Technology-led transformation

Technology is reshaping the in-store experience in dynamic ways. Innovations such as smart fitting rooms, augmented reality, and generative AI are making shopping more interactive and tailored. At the same time, connected devices are enabling retailers to gather real-time insights, allowing them to fine-tune everything from product displays to inventory management.

Key Elements of Successful Experiential Retail

Product interaction

- In-store demos and AR tools allow customers to try before they buy.

- Brands like Nike have introduced 3D sneaker customization and AR tools that allow visitors to digitally try on and personalize footwear.

Events and community

- Experiences like makeup tutorials, product launches, and pop-ups build a sense of belonging.

- Foot Locker’s “Sneaker Hub” in select US locations merges cultural events with shopping, encouraging community visits and brand loyalty.

Personalization

- According to Salesforce, 73% of consumers expect companies to understand their needs and preferences.

- Personalized recommendations, birthday offers, and behavior-based discounts are now becoming standard features in successful retail formats.

Convenience through technology

Modern shoppers value speed and simplicity. Retailers are embracing tools like contactless checkout, self-service kiosks, and mobile app support to make the in-store experience faster and more efficient. These technologies not only reduce friction but also allow customers to shop on their own terms, with minimal wait times and greater control.

Sustainability and ethics

Shoppers today are increasingly mindful of the impact their purchases have on the planet. As a result, many retailers are prioritizing sustainability by using eco-friendly materials, offering recycling programs, and designing energy-efficient store environments. Ethical practices and transparency are becoming key factors in building trust and long-term brand loyalty.

Regional and Global Examples

| Brand/Region | Experiential tactic | Impact |
| --- | --- | --- |
| Nike (global) | AR customization, digital try-ons | Boost in loyalty and user-generated content |
| Foot Locker (US) | Try-on hubs, exclusive events | Higher store traffic and repeat visits |
| Sephora (global) | In-store beauty AR, educational workshops | Improved conversion and longer dwell times |
| Dubai malls | Pop-ups, immersive tech installations | Increased tourist footfall and social sharing |

Challenges in Implementation

- Balancing automation and human interaction: While technology enhances convenience, too much automation can lead to impersonal experiences. Successful retailers strike a balance by ensuring knowledgeable staff are available to add a human touch where it matters most.

- High upfront costs: Building immersive, experience-driven store formats often involves considerable investment in technology, space design, and training. Retailers must carefully plan and prioritize these efforts to ensure long-term value.

- Data privacy concerns: Personalized shopping relies heavily on customer data, making data protection a critical responsibility. Retailers need to maintain strong privacy practices and cybersecurity measures to protect consumer trust.

The Value of Experiential Retail

- Increased foot traffic: Memorable in-store moments bring customers back and encourage word-of-mouth promotion.

- Deeper engagement: Shoppers engage longer and more meaningfully with products, which can increase average basket sizes.

- Brand differentiation: Experiential tactics help retailers stand out in saturated markets, especially when aligned with local tastes and culture.

What’s Next?

- Modular store formats: Smaller, agile stores that serve multiple purposes, from retail to community events, will continue to grow in number.

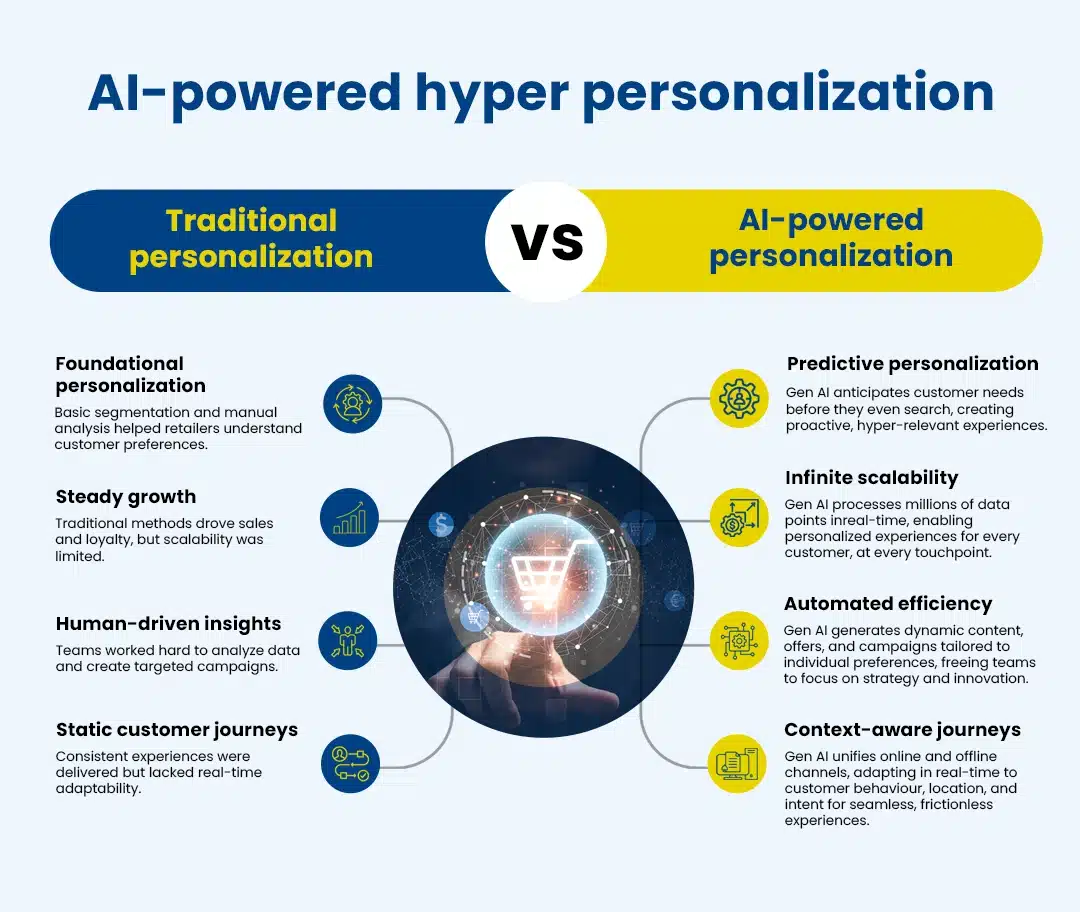

- Hyper-personalization through AI: AI will fine-tune everything from product recommendations to store layouts, based on individual shopper behavior.

- Sustainable innovation: From zero-waste packaging to renewable energy in-store, sustainability will remain a competitive differentiator.

Experiential retail is fast becoming an expectation. As retailers focus on delivering immersive, tech-enabled, and values-driven experiences, they are reshaping the role of the physical store. Brands that invest in purposeful innovation and stay aligned with customer needs will lead the future of in-store shopping.

Want to explore how InfoVision can help reimagine your in-store experience? Connect with us at digital@infovision.com. You can also download our whitepaper to dive deeper into experiential retail strategies and global case studies.