Loyal customers in retail make more repeat purchases, shop more and refer more. Organizations in the US, on average, spend 4 to 6 times more on acquiring new customers than on keeping existing ones. From a business perspective, especially in today’s competitive landscape where customers have multiple options to choose from, customer retention is as important as customer acquisition. This is why customer loyalty needs to be carefully studied and planned in order to maximize the value of a loyal customer. Besides, many retail industry studies conclude that product quality alone does not suffice for modern buyers. Customer service and personalized recommendations are a definite plus that can swing a purchase decision in a brand’s favor.

Loyalty is Valuable Even When Partial

A returning customer who repeatedly prefers to buy from one brand over another is considered a loyal customer. Retail loyalty differs from other kinds of brand loyalty in terms of frequency of purchase, range of products and fierce competition. For these reasons, 100% customer loyalty is quite unlikely in retail. This does not diminish the value of a loyal customer for a retailer. Several factors play a role in influencing the customer to favor a particular brand. Convenience while shopping, satisfaction with the range of products, attractive offers and familiarity with the brand are some such factors.

Customer Loyalty Programs

Building loyalty programs is an effective way to nudge customers to prefer your brand. In the US, 50% of consumers use a loyalty card or app to purchase fuel, and around 71% of retailers offer some kind of loyalty program. For loyalty programs to really work without increasing customer friction, they need to be contextual and timely. Otherwise, they run the risk of backfiring. Advanced technologies and innovative solutions for loyalty programs give retailers deeper customer insights in real time and thus make hyper-personalization possible.

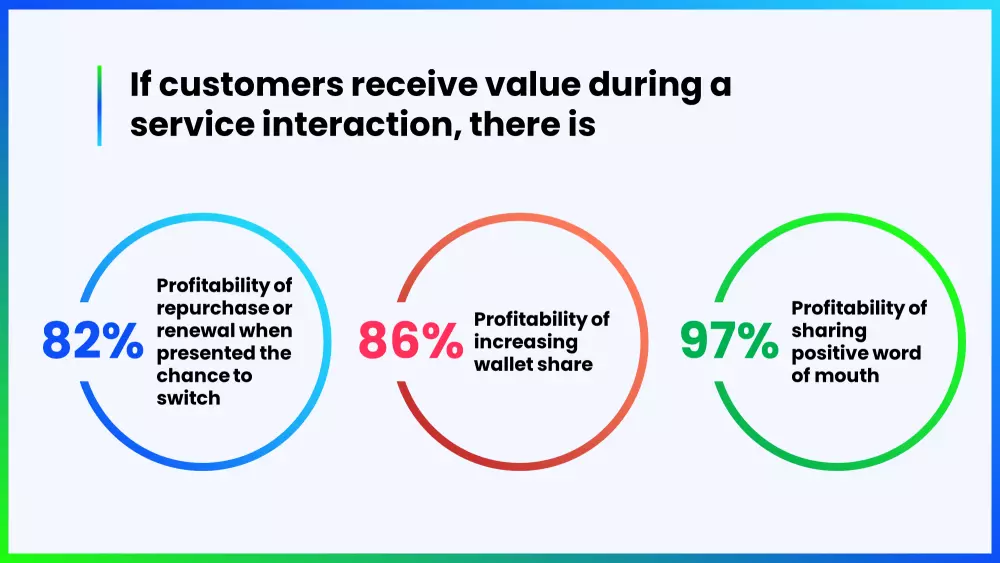

According to a Gartner Insight, “customer loyalty can be increased by performing value enhancement activities that leaves customer feeling like they can use the product better and are more confident in their purchase decision.”

Challenges with the Traditional Approach to Loyalty Programs

While loyalty programs are not a new concept, conventional methods have not always had a significant impact on retailers or their customers. Worse still, some of them backfire and are seen as a nuisance by customers. Here are some challenges that modern, technology-backed loyalty programs need to get right.

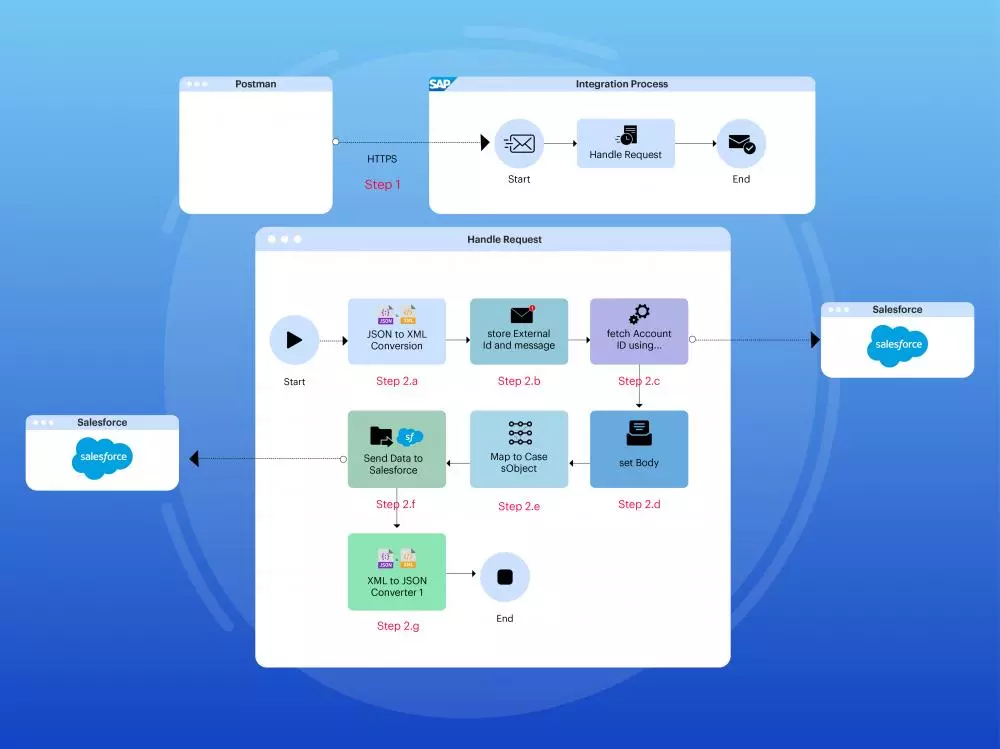

- Integration with Existing Systems

As retailers move towards an omnichannel presence and digitally transform every aspect of their operations, integrating loyalty programs with these systems has not been straightforward. Without such integration, it is very difficult to harness the full potential of loyal customers. Retailers therefore need to select their loyalty platform carefully, deciding whether to use an off-the-shelf solution from a provider or develop one in-house.

- Analytics

Insights into customer behavior and customers’ responses to promotional offers are needed to understand how well the loyalty program is being received. While retailers may have this data, it is usually scattered and not systematic enough to run analytics on. Data insights are also crucial to ascertain whether the value created for the retailer is greater than the value delivered to the customer. Any good loyalty program solution should have this capability built in.

- Impersonal Offers

Generic loyalty programs are seldom relevant. Personalized rewards are much more meaningful to customers. Several brands fail to use their customer data effectively to personalize their offers and promotions. Offering a coffee promotion to a customer who usually buys tea is exactly what needs to be avoided.
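
As a rough illustration of the coffee-versus-tea point, the sketch below picks an offer from the category a customer buys most often. The function names (`top_category`, `pick_offer`), the record fields and the sample data are all hypothetical, and a real loyalty platform would likely draw on far richer signals than a simple purchase count.

```python
from collections import Counter


def top_category(purchases):
    """Return the category bought most often, or None if there is no history."""
    counts = Counter(p["category"] for p in purchases)
    if not counts:
        return None
    return counts.most_common(1)[0][0]


def pick_offer(purchases, offers):
    """Return the first offer matching the customer's top category, else None."""
    favorite = top_category(purchases)
    for offer in offers:
        if offer["category"] == favorite:
            return offer
    return None


# Hypothetical sample data: a customer who mostly buys tea.
history = [
    {"category": "tea"},
    {"category": "tea"},
    {"category": "snacks"},
]
offers = [
    {"category": "coffee", "text": "20% off coffee"},
    {"category": "tea", "text": "Buy 2 teas, get 1 free"},
]

chosen = pick_offer(history, offers)
print(chosen["text"] if chosen else "No relevant offer")
```

Returning no offer at all when nothing matches is a deliberate choice in this sketch: it assumes that staying silent is better than sending an irrelevant promotion.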

- Transaction-only Focus

Most traditional approaches take a narrow view of customer loyalty that is linked only to purchases. However, every time a customer writes a review or refers your brand to others, they are displaying loyalty, and such actions can be treated as triggers for rewards.

- Not Simple Enough

With everything that goes on, the last thing customers want is a loyalty program that is hard to understand and difficult to keep track of. Similarly, if the process to redeem points is not simple enough, customers may not bother and might actually be put off, which is quite the opposite of what any loyalty program intends. When technology is being used to make the buying experience as simple as possible, why shouldn’t the same principle apply to loyalty programs?

- Short-term Redundant Offers

Some users may like to collect rewards in the form of redeemable points, while others may prefer membership coupons or event passes. Repetitive offers can become redundant and irrelevant. Similarly, short-term offers do not build loyalty in the long term. A good mix of offers that covers a wider range and a longer period is more appreciated by customers.

Digital Wallets and Shared Loyalty Programs

Retailers with mature loyalty programs are now looking to offer shared loyalty programs in collaboration with other brands. This proves to be more cost-efficient for the brands and more beneficial to the users. Also known as coalition loyalty, this is done with the help of digital wallets and extensive partnerships with unrelated brands. For example, retailers may partner with fuel providers to extend the scope of their rewards and further strengthen customer loyalty. Brands with different purchase cycles also stand to gain from each other’s customer loyalty. Digital wallets or mobile wallets have given rise to a new kind of loyalty economy, where consumers can track their reward points from various brands in one location and actually use them at POS counters. With everything now being on mobile, users no longer need to keep another physical loyalty card handy.

Gamification is another new way to build engaging loyalty programs. It helps engage customers, create a sense of community or accomplishment, and generate excitement for the brand. Such programs need to be highly creative and leverage the latest technologies.

The bottom line of any loyalty program is to generate profits and not to become a cost center. Knowing your customers’ preferences and having that data handy is the only way to create personalized and targeted promotions. Identifying the right channel (POS, POPs, SMS, in-app), the right offer and the right time may look simple but has clearly been a challenge for retailers. Partnering with an experienced loyalty solution provider like InfoVision can help overcome most of these challenges. The team of technology and retail experts at InfoVision has successfully implemented a combined mobile fuel payment system, digital wallet, mobile checkout and customer rewards system for a leading multinational retailer.

Want to talk to our expert? Please write to us at info@infovision.com